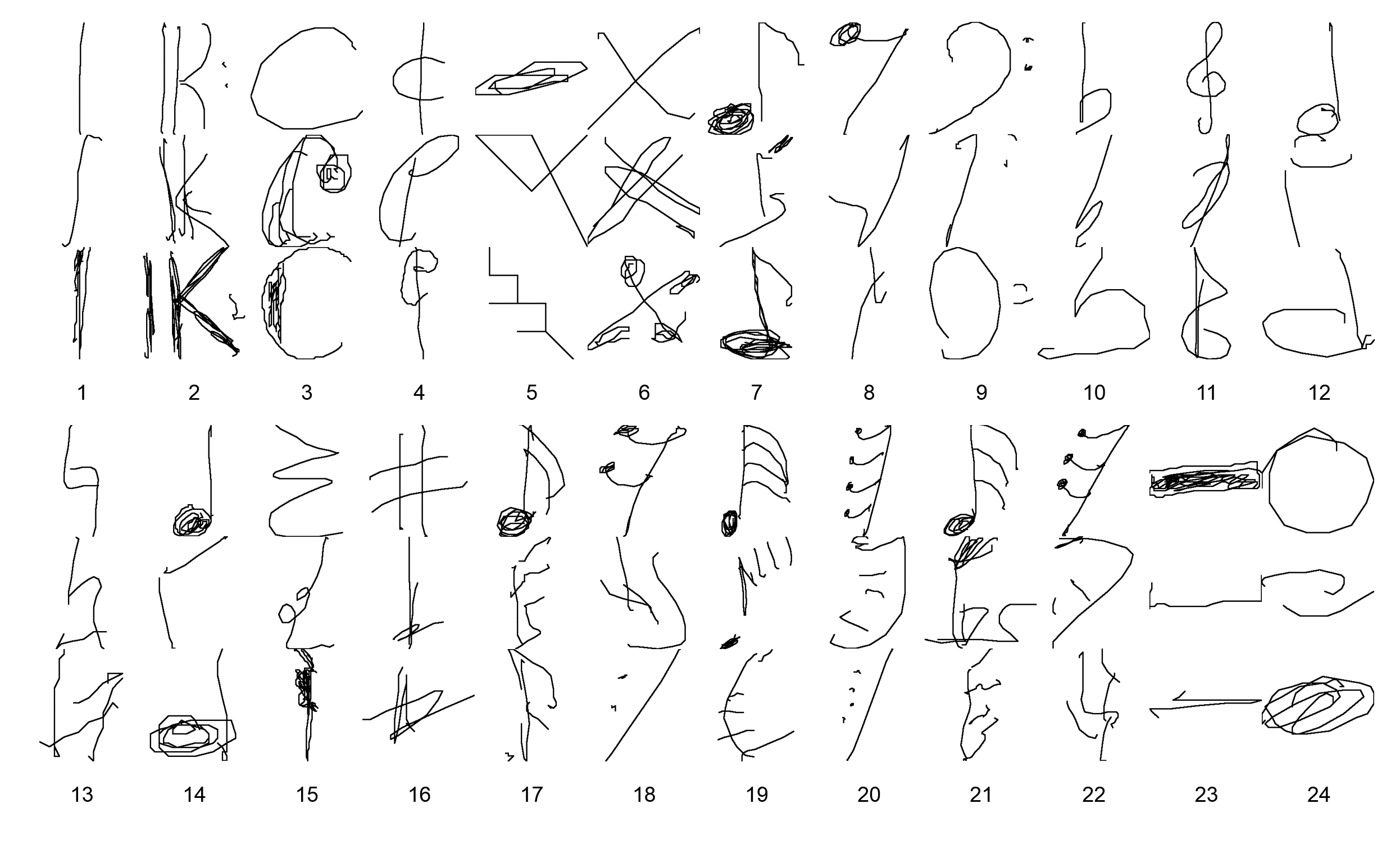

Labels of Strokes in the HOMUS Dataset

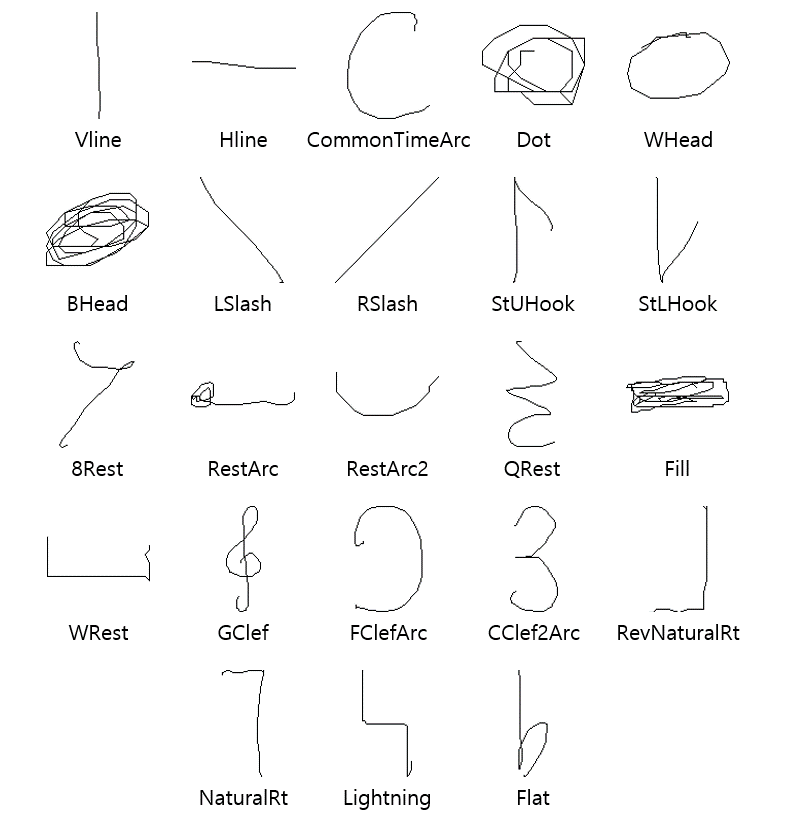

The Handwritten Online Musical Symbols (HOMUS) dataset<ref>J. Calvo-Zaragoza and J. Oncina, Recognition of Pen-Based Music Notation: the HOMUS dataset, ICPR 2014.</ref> consists of 15200 musical symbol samples collected from 100 musicians. Each sample belongs to one of 32 symbols, and the dataset can be downloaded from its homepage. Each symbol sample consists of one or more strokes, where a stroke is a time-ordered sequence of two-dimensional points: the successive positions of the stylus while it touches the device. However, the dataset does not provide labels for the strokes of its symbols. Excluding the 3200 samples of the 8 time-signature symbols, we analyzed all 31768 strokes in the 12000 samples of the remaining 24 symbols, shown in Fig. 1, and chose the 23 basic strokes shown in Fig. 2.
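To make the notion of a stroke concrete, here is a minimal sketch of reading one HOMUS sample into a symbol name plus a list of strokes. It assumes a plain-text layout in which the first line holds the symbol name and every further line holds one stroke, written as `x,y` pairs separated by semicolons. That layout is an assumption for illustration; please check it against the files distributed on the dataset homepage. The names `SymbolSample` and `parse_homus_sample` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class SymbolSample:
    symbol: str                 # symbol name (first line of the file)
    strokes: List[List[Point]]  # each stroke: time-ordered (x, y) pen positions


def parse_homus_sample(text: str) -> SymbolSample:
    """Parse one sample, ASSUMING: line 1 = symbol name, each further
    non-empty line = one stroke written as 'x1,y1;x2,y2;...'."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines:
        raise ValueError("empty sample file")
    symbol, stroke_lines = lines[0], lines[1:]
    strokes: List[List[Point]] = []
    for ln in stroke_lines:
        points: List[Point] = []
        for pair in ln.split(";"):
            if not pair:  # tolerate a trailing ';'
                continue
            x, y = pair.split(",")
            points.append((float(x), float(y)))
        strokes.append(points)
    return SymbolSample(symbol, strokes)


if __name__ == "__main__":
    # A made-up two-stroke sample, not taken from the dataset.
    sample = parse_homus_sample("Example\n10,20;11,22;12,25;\n30,5;30,40\n")
    print(sample.symbol, len(sample.strokes))
```

Under this assumed layout, the number of strokes in a sample is simply the number of non-empty lines after the first one.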

When choosing the basic strokes, we tried to define as few of them as possible while keeping the similarity between any pair of basic strokes as small as possible.

As a result, each basic stroke covers a fairly wide range of variations.
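The text does not say which similarity measure guided the choice of basic strokes. As an illustration only, the sketch below swaps in dynamic time warping (DTW) over size-normalized strokes and reports the closest pair in a set of stroke prototypes; a well-separated vocabulary is one whose closest pair is still far apart. The normalization, the DTW measure, and the names `normalize`, `dtw` and `closest_pair` are all assumptions, not the procedure used to build the labels.

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def normalize(stroke: List[Point]) -> List[Point]:
    """Center on the centroid and divide by the larger bounding-box side,
    so position and size do not dominate the comparison (an assumption)."""
    xs = [p[0] for p in stroke]
    ys = [p[1] for p in stroke]
    cx, cy = sum(xs) / len(xs), sum(ys) / len(ys)
    scale = max(max(xs) - min(xs), max(ys) - min(ys)) or 1.0
    return [((x - cx) / scale, (y - cy) / scale) for x, y in stroke]


def dtw(a: List[Point], b: List[Point]) -> float:
    """Classic O(len(a) * len(b)) DTW with Euclidean point cost."""
    n, m = len(a), len(b)
    d = [[math.inf] * (m + 1) for _ in range(n + 1)]
    d[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = math.dist(a[i - 1], b[j - 1])
            d[i][j] = cost + min(d[i - 1][j], d[i][j - 1], d[i - 1][j - 1])
    return d[n][m]


def closest_pair(prototypes: Dict[str, List[Point]]) -> Tuple[str, str, float]:
    """Return the most similar pair of prototypes and their DTW distance."""
    norm = {k: normalize(v) for k, v in prototypes.items()}
    return min(
        ((p, q, dtw(norm[p], norm[q])) for p, q in combinations(norm, 2)),
        key=lambda t: t[2],
    )


if __name__ == "__main__":
    # Toy prototypes; "Tilted" is a hypothetical near-vertical line.
    protos = {
        "VLine": [(0, 0), (0, 10), (0, 20)],
        "HLine": [(0, 0), (10, 0), (20, 0)],
        "Tilted": [(0, 0), (1, 10), (2, 20)],
    }
    print(closest_pair(protos))
```

In this toy set the near-vertical stroke lands very close to `VLine`, which is the kind of pair a designer would merge into one basic stroke, accepting more within-class variation in exchange for fewer, better-separated classes.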

Using the 23 basic strokes, we labeled every stroke as one of 24 classes (the 23 basic strokes plus 'None'), as summarized in Table 1.

Note that most samples of the 24 symbols in Fig. 1 can be represented as combinations of the basic strokes in Fig. 2.

'None' in Table 1 contains the strokes that cannot be assigned to any of the 23 basic strokes.

When labeling the samples of the Dot symbol, we labeled some of them as 'None' because they were as large as BHead strokes; this can be compensated for in the symbol recognition step, as shown in a previous study<ref>H. Miyao and M. Maruyama, An online handwritten music symbol recognition system, International Journal of Document Analysis and Recognition, vol. 9, pp. 49-58, 2007.</ref>.

Each data file contains the name of the symbol and the labels of its strokes, laid out in the same way as the corresponding sample file in the HOMUS dataset. The only difference is that the files contain stroke labels instead of the raw stroke data (series of two-dimensional coordinates). For consistency, all strokes of the samples of the 8 time-signature symbols are labeled 100.
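A minimal sketch of reading one label file follows. Because the exact separators are not spelled out above, it assumes only that the symbol name is on the first line and that the remaining text holds one integer label per stroke, accepting newlines, spaces, commas or semicolons between labels. It also rejects anything other than 0 to 23 (Table 1) or 100 (time signatures). The function name `parse_label_file` is hypothetical.

```python
import re
from typing import List, Tuple

TIME_SIGNATURE_LABEL = 100  # used for every stroke of the 8 time-signature symbols
MAX_BASIC_LABEL = 23        # labels 0..23 as listed in Table 1


def parse_label_file(text: str) -> Tuple[str, List[int]]:
    """Return (symbol name, stroke labels), ASSUMING the symbol name is on
    the first line and the stroke labels follow as integers."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines:
        raise ValueError("empty label file")
    symbol = lines[0]
    tokens = re.split(r"[\s,;]+", " ".join(lines[1:]))
    labels = [int(tok) for tok in tokens if tok]
    for lab in labels:
        if not (0 <= lab <= MAX_BASIC_LABEL or lab == TIME_SIGNATURE_LABEL):
            raise ValueError(f"unexpected stroke label {lab}")
    return symbol, labels


if __name__ == "__main__":
    print(parse_label_file("Example\n6\n1\n"))
```

If the labels are stored in the same order as the strokes of the matching HOMUS sample (again an assumption), the i-th label can be paired with the i-th stroke, and a length mismatch flags a misaligned pair of files.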

Table 1. Stroke labels and the number of strokes assigned to each label.

| Stroke label | Stroke | Number of strokes | Stroke label | Stroke | Number of strokes |
|---|---|---|---|---|---|
| 0 | None | 4281 | 12 | RestArc | 554 |
| 1 | VLine | 5377 | 13 | RestArc2 | 890 |
| 2 | HLine | 1222 | 14 | QRest | 152 |
| 3 | CommonTimeArc | 810 | 15 | Fill | 324 |
| 4 | Dot | 1888 | 16 | WRest | 89 |
| 5 | WHead | 1053 | 17 | GClef | 388 |
| 6 | BHead | 2904 | 18 | FClefArc | 913 |
| 7 | LSlash | 3662 | 19 | CClef2Arc | 161 |
| 8 | RSlash | 3719 | 20 | RevNaturalRt | 35 |
| 9 | StUHook | 362 | 21 | NaturalRt | 262 |
| 10 | StLHook | 1211 | 22 | Lightning | 98 |
| 11 | 8Rest | 1151 | 23 | Flat | 262 |
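As a consistency check, the counts in Table 1 can be transcribed into a lookup and summed: they add up to the 31768 strokes analyzed, and 'None' accounts for roughly 13.5% of them. The same lookup makes the class imbalance visible (RevNaturalRt has 35 strokes against 5377 for VLine), which is worth keeping in mind when training a stroke classifier. The names `STROKE_TABLE`, `total_strokes` and `share` are hypothetical.

```python
from typing import Dict, Tuple

# Stroke label -> (stroke name, number of strokes), transcribed from Table 1.
STROKE_TABLE: Dict[int, Tuple[str, int]] = {
    0: ("None", 4281), 1: ("VLine", 5377), 2: ("HLine", 1222),
    3: ("CommonTimeArc", 810), 4: ("Dot", 1888), 5: ("WHead", 1053),
    6: ("BHead", 2904), 7: ("LSlash", 3662), 8: ("RSlash", 3719),
    9: ("StUHook", 362), 10: ("StLHook", 1211), 11: ("8Rest", 1151),
    12: ("RestArc", 554), 13: ("RestArc2", 890), 14: ("QRest", 152),
    15: ("Fill", 324), 16: ("WRest", 89), 17: ("GClef", 388),
    18: ("FClefArc", 913), 19: ("CClef2Arc", 161), 20: ("RevNaturalRt", 35),
    21: ("NaturalRt", 262), 22: ("Lightning", 98), 23: ("Flat", 262),
}


def total_strokes(table: Dict[int, Tuple[str, int]] = STROKE_TABLE) -> int:
    """Sum of the per-label stroke counts."""
    return sum(count for _, count in table.values())


def share(label: int, table: Dict[int, Tuple[str, int]] = STROKE_TABLE) -> float:
    """Fraction of all labeled strokes that carry the given label."""
    return table[label][1] / total_strokes(table)


if __name__ == "__main__":
    print(total_strokes())
    print(f"{share(0):.1%} of strokes are labeled 'None'")
    rarest = min(STROKE_TABLE.values(), key=lambda v: v[1])
    print("rarest class:", rarest[0])
```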

We have developed an online algorithm for handwritten musical symbol recognition that uses these stroke labels. The algorithm is described in our paper<ref>J. Oh, S. J. Son, S. Lee, J.-W. Kwon, and N. Kwak, Online Recognition of Handwritten Music Symbols, International Journal of Document Analysis and Recognition, vol. 20, pp. 79-89, June 2017.</ref>.

References

<references />

Contact

Sung Joon Son, Ph.D. candidate, E-mail: sjson718_at_snu_dot_ac_dot_kr